In my day job, we work with transformer models a lot, so I’m always interested in how they advance. I played around with the OpenAI Chatbot and found the results compelling. Asking it to generate a coding tutorial (“How would you create an event sourcing application in PHP 8?") was pretty great, though I recognized sentences it generated as coming from a couple of the more popular human-written tutorials on that subject.

The thing to remember about large language models is that they are all about probabilities and correlation. A large enough model “knows” that in the presence of certain other words – not exactly semantic context, but close enough for jazz – the word “python” and “snake” are equivalent, while in other contexts, “python” and “programming language” are related. Given the presence of words in the prompt, the model can predict what words should probably follow in a response.

This can lead to sentences that read like actual thought. In many cases, they are the product of thought, just not the machine’s. They’re the product of human thought, repeated enough times that patterns appear in the data. But probabaility isn’t certainty, and the machine can generate perfectly reasonable sentences and plausible “arguments” that are completely incorrect.

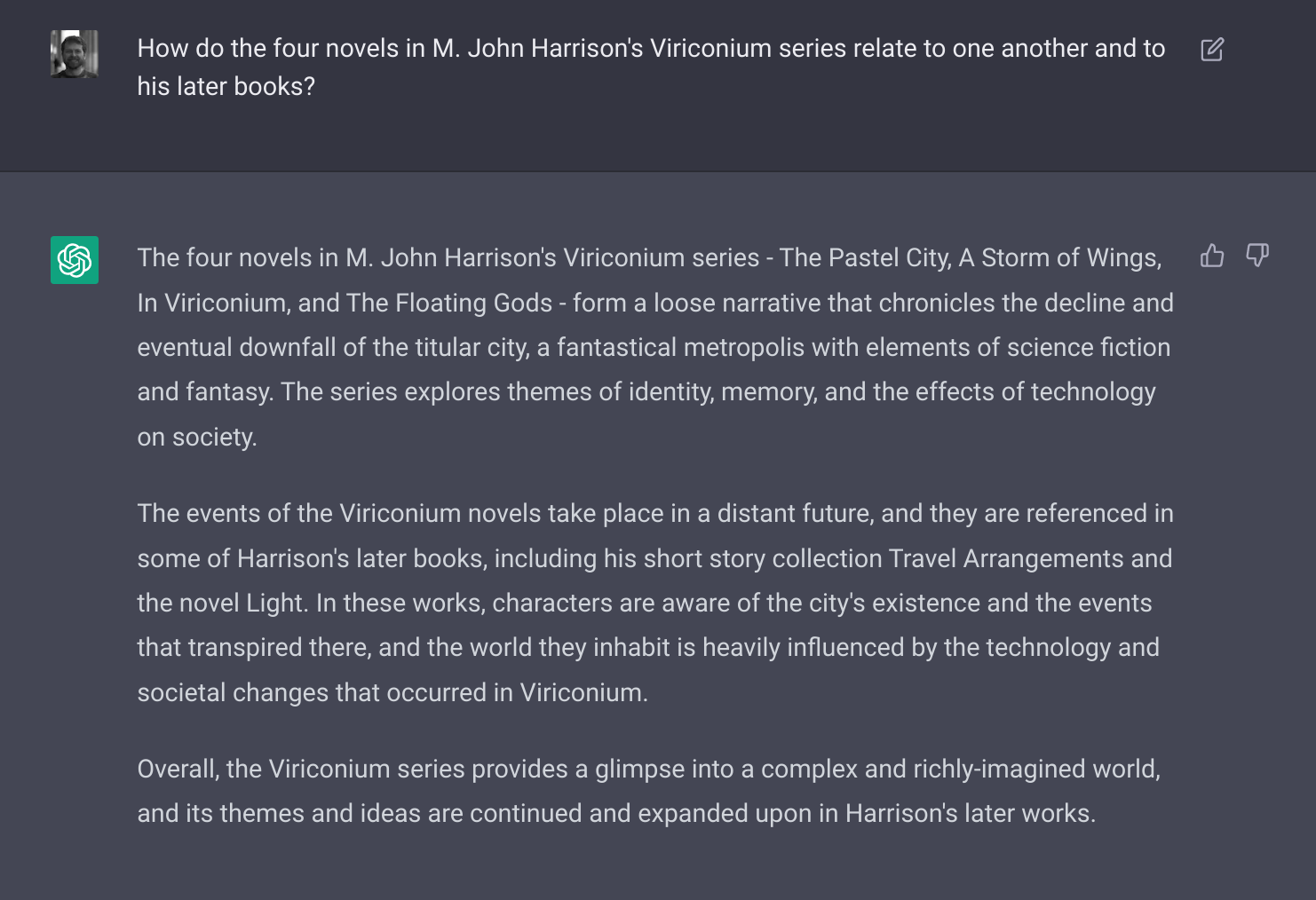

To wit: Since Viriconium is on my mind lately, I asked the machine how that book relates to Harrison’s later works. The result was convincing enough that I had to take a look at reviews of the books in question to be sure I hadn’t missed something on my recent reading of Light and Travel Arrangements. The concluding paragraph here is 100% completely incorrect, and when asking the chatbot to try again, it generated a response that said the exact opposite.

Any time you play with one of these technologies, I advise you to keep in mind: The model does not think. It only predicts based on what it has seen in the past. A useful illusion, but still an illusion.